How InSpace's Machine Learning-Based Toxicity Filter Identifies and Blocks Potentially Harmful Messages

Our toxicity filter uses machine learning to identify potentially harmful or toxic messages. If a participant types a toxic chat message, they are notified when they try to send it to the group, and the message is blocked. At their discretion, hosts may toggle this feature off to accommodate the needs of their classroom setting.

In any online community, toxicity can be a major issue that harms user experience and engagement. At InSpace, we take this issue seriously, which is why we developed a machine learning-based toxicity filter to identify and block potentially harmful messages. Unlike simple word-based filters, our technology considers the context of the message to better identify toxicity.

A simple way to identify toxic comments is to check whether a message contains any word from a fixed list, such as a list of profanity. We did not want to judge messages by their words alone; we also wanted to consider the context in which those words appear. Machine learning let us do that.
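To make the limitation of the word-list approach concrete, here is a minimal sketch of such a filter. The blocklist and the `word_list_is_toxic` helper are hypothetical and are not InSpace's code; the point is only that matching words ignores context, so it both misses hostile messages and flags harmless ones.

```python
import re

# Hypothetical blocklist, for illustration only.
BLOCKLIST = {"idiot", "stupid"}


def word_list_is_toxic(message: str) -> bool:
    """Flag a message if any of its lowercase words appears in the blocklist."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in BLOCKLIST for word in words)


# A hostile message with no blocklisted word slips through...
print(word_list_is_toxic("Nobody wants you here, just leave."))  # False
# ...while a harmless remark about oneself is flagged.
print(word_list_is_toxic("I made a stupid typo in my slide."))  # True
```

A context-aware classifier is meant to avoid both of these mistakes by scoring the message as a whole rather than checking individual words.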

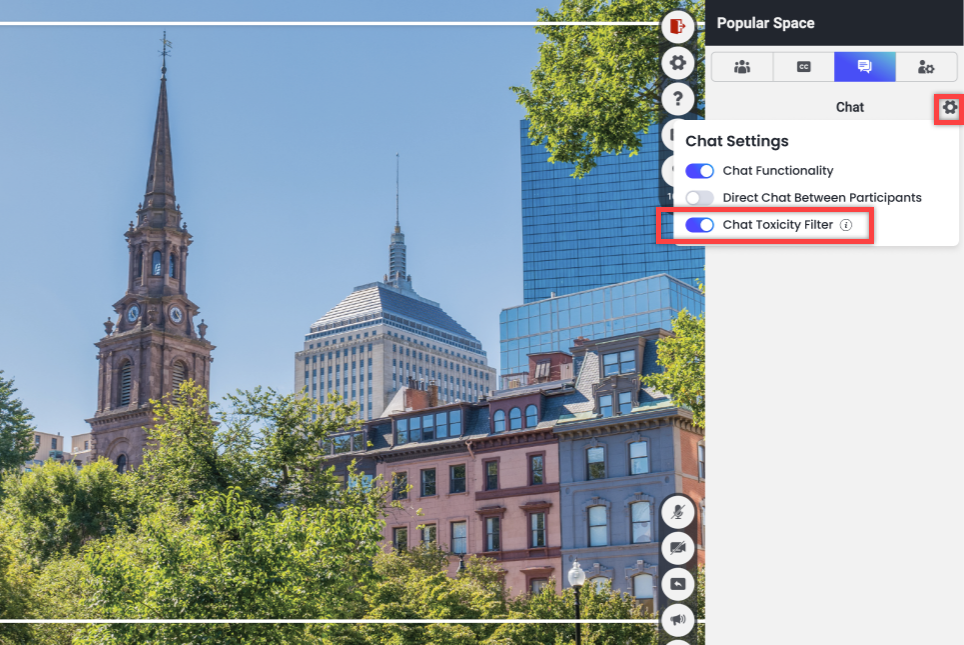

Hosts can toggle the chat toxicity filter on and off. The filter is on by default, so participants cannot send toxic messages unless a host turns it off. When a participant attempts to send a toxic message, they receive a private warning and the message is not sent. Hosts are not notified of these unsent messages.
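The send behavior described above can be sketched as a small gate in front of the group chat. This is a hedged illustration, not InSpace's implementation: `ChatRoom`, `SendResult`, the `scorer` callable (a stand-in for the machine learning model), and the `0.5` threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical stand-in for the ML model: maps a message to a score in [0, 1].
ToxicityScorer = Callable[[str], float]


@dataclass
class SendResult:
    delivered: bool
    private_warning: Optional[str] = None  # shown only to the sender


@dataclass
class ChatRoom:
    scorer: ToxicityScorer
    filter_enabled: bool = True  # the filter is on by default
    threshold: float = 0.5  # hypothetical cutoff, not InSpace's value
    messages: List[str] = field(default_factory=list)  # what the group sees

    def send(self, sender: str, text: str) -> SendResult:
        if self.filter_enabled and self.scorer(text) >= self.threshold:
            # Blocked: the sender is warned privately, nothing reaches the
            # group, and no host notification is generated.
            return SendResult(False, "This message may be harmful and was not sent.")
        self.messages.append(f"{sender}: {text}")
        return SendResult(True)


def toy_scorer(text: str) -> float:
    # Placeholder for a trained classifier; a real model scores context, not a phrase.
    return 0.9 if "nobody wants you" in text.lower() else 0.1


room = ChatRoom(scorer=toy_scorer)
print(room.send("Sam", "Nobody wants you here.").delivered)  # False
print(room.send("Sam", "See you all next week!").delivered)  # True
print(room.messages)  # ['Sam: See you all next week!']
```

Keeping the blocked path free of side effects (no message stored, no host alert) mirrors the behavior described: only the sender ever learns that a message was stopped.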

Important to know:

- A host's chat toxicity preference becomes the default for all of their other sessions

- Hosts will be notified if a co-host turns the chat toxicity filter on or off during their session
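The two rules above can be modeled with a per-host preference store and a session that notifies the host about co-host changes. This is a minimal sketch under stated assumptions: `HostPreferences`, `Session`, and `host_inbox` are hypothetical names, and the sketch assumes that only the host's own toggle is saved as their default, which the article does not specify.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HostPreferences:
    """Hypothetical per-host store of the last chosen filter setting."""
    defaults: Dict[str, bool] = field(default_factory=dict)

    def default_for(self, host_id: str) -> bool:
        # The filter is on unless this host has turned it off before.
        return self.defaults.get(host_id, True)


@dataclass
class Session:
    host_id: str
    prefs: HostPreferences
    filter_enabled: bool = field(init=False)
    host_inbox: List[str] = field(default_factory=list)  # notifications for the host

    def __post_init__(self) -> None:
        # A new session starts from the host's saved preference.
        self.filter_enabled = self.prefs.default_for(self.host_id)

    def set_filter(self, actor_id: str, enabled: bool) -> None:
        self.filter_enabled = enabled
        if actor_id == self.host_id:
            # Assumption: only the host's own choice becomes their default.
            self.prefs.defaults[self.host_id] = enabled
        else:
            # A co-host changed the setting, so the host is told.
            state = "on" if enabled else "off"
            self.host_inbox.append(f"{actor_id} turned the chat toxicity filter {state}")


prefs = HostPreferences()
first = Session("host-1", prefs)
first.set_filter("host-1", False)  # host turns the filter off
second = Session("host-1", prefs)
print(second.filter_enabled)  # False: carried over to the next session
second.set_filter("cohost-7", True)
print(second.host_inbox)  # ['cohost-7 turned the chat toxicity filter on']
```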

At InSpace, we believe in providing a safe and inclusive environment for all users. Our machine learning-based toxicity filter is one way we achieve this goal, by identifying and blocking potentially harmful messages. Hosts can rest assured that their community is protected, and users can enjoy a positive and respectful chat experience.